Draft:AI Scaling Wall

Draft article not currently submitted for review.

The AI Scaling Wall is a debated concept in artificial intelligence (AI) research that describes the observed limitations in improving AI performance through the traditional approach of scaling—expanding machine learning (ML) model size, training data, and computational power. While scaling has historically driven significant advances in AI capabilities, such as the development of large language models (LLMs) and state-of-the-art computer vision systems, researchers have noted diminishing returns in performance as these models grow larger. This phenomenon raises concerns that current AI development strategies may face fundamental barriers, requiring new approaches to sustain progress.

Key factors contributing to the AI Scaling Wall include computational and energy constraints, the limited availability of high-quality training data, and inherent architectural inefficiencies in existing AI models. These challenges have prompted discussions about the environmental impacts of AI, the economic feasibility of scaling, and the accessibility of AI technology for smaller organizations. Researchers and industry leaders are exploring alternative strategies, such as improving data efficiency, developing novel neural network architectures, and enhancing models' reasoning abilities, to overcome these limitations and continue advancing the field.

Background

[edit]

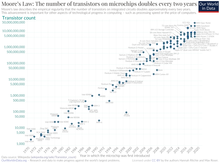

AI scaling laws are empirical observations that describe the predictable relationship between the size of machine learning models, the amount of training data, the computational power used, and the models' resulting performance. These laws came to prominence in the late 2010s and early 2020s through research conducted by AI organizations such as OpenAI and DeepMind, which demonstrated that increasing model size and the amount of training data often led to consistent improvements across a range of tasks, including natural language processing (NLP) and computer vision. Scaling laws highlighted that many performance gains could be achieved simply by expanding resources, driving the development of large-scale neural networks such as GPT-3 and BERT.

Model performance depends most strongly on scale, which consists of three factors: the number of model parameters N (excluding embeddings), the size of the dataset D, and the amount of compute C used for training.

— Kaplan et al.[1]
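The fitted relationships in Kaplan et al. take the form of power laws in each factor when the other two are not limiting. A compact statement is given below, where L is the cross-entropy test loss, N_c, D_c and C_c are fitted constants, and C_min is the compute used when training is allocated efficiently. The exponents are the approximate values reported in that paper for Transformer language models; they are empirical fits over the range studied and are not guaranteed to hold at larger scales. The last line is the paper's approximation for training compute in floating-point operations.

```latex
\begin{aligned}
L(N) &\approx \left(\frac{N_c}{N}\right)^{\alpha_N}, & \alpha_N &\approx 0.076 \\
L(D) &\approx \left(\frac{D_c}{D}\right)^{\alpha_D}, & \alpha_D &\approx 0.095 \\
L(C_{\min}) &\approx \left(\frac{C_c}{C_{\min}}\right)^{\alpha_C}, & \alpha_C &\approx 0.050 \\
C &\approx 6ND
\end{aligned}
```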

Concept

The AI Scaling Wall refers to a hypothesized point beyond which further increases in the resources devoted to an AI model, such as its size, the amount of data used to train it, and the computing power used to train it, yield much smaller performance gains than comparable increases did before that point, or no gains at all. Scaling laws, which describe the predictable relationship between these factors and AI performance, have guided the development of increasingly large and sophisticated models. However, researchers have identified potential constraints that could impede this progress, including diminishing returns from additional compute, the exhaustion of high-quality training data, and physical or economic barriers to scaling infrastructure.

Research such as "Scaling Laws for Neural Language Models" by researchers at OpenAI[1] has demonstrated that larger models perform better at language modeling, but these gains follow a power-law relationship in which each further increase in scale yields a smaller improvement. Other studies, such as those from DeepMind and Anthropic, have explored the theoretical and practical challenges of sustaining this trend. As a result, the concept of the AI Scaling Wall has sparked discussions among researchers and industry leaders about the need for new paradigms, such as more efficient algorithms, neuromorphic computing, or domain-specific innovations, to continue advancing AI capabilities.
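A minimal numerical sketch of the shrinking returns, assuming the parameter-scaling form L(N) = (N_c/N)^α_N with α_N ≈ 0.076 and N_c ≈ 8.8 × 10^13, the approximate values Kaplan et al. report; the starting size and number of steps are arbitrary illustrative choices. Each tenfold increase in parameters multiplies the loss by the same factor, 10^(-0.076) ≈ 0.84, so the absolute reduction bought by each successive tenfold increase is about 16 percent smaller than the one before.

```python
"""Illustrate shrinking returns under a power-law loss curve.

Assumes L(N) = (N_c / N) ** alpha_N with approximate constants taken from
Kaplan et al.; the numbers are illustrative, not predictions for a real model.
"""

ALPHA_N = 0.076   # approximate parameter-scaling exponent (assumption)
N_C = 8.8e13      # approximate fitted constant, in non-embedding parameters


def loss(n_params: float) -> float:
    """Power-law cross-entropy loss as a function of parameter count."""
    return (N_C / n_params) ** ALPHA_N


def gains_per_decade(start: float, decades: int) -> list[float]:
    """Absolute loss reduction from each successive 10x increase in parameters."""
    gains = []
    n = start
    for _ in range(decades):
        gains.append(loss(n) - loss(10 * n))
        n *= 10
    return gains


if __name__ == "__main__":
    for i, g in enumerate(gains_per_decade(1e8, 4)):
        print(f"10x step {i + 1}: loss falls by {g:.4f}")
```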

Our findings reveal that across concepts, significant improvements in zero-shot performance require exponentially more data

— Udandarao et al.[2]
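The quoted finding implies a log-linear trend: if performance rises roughly with the logarithm of how often a concept appears in pretraining data, each fixed gain requires multiplying that data by a constant factor. The sketch below uses hypothetical coefficients A and B, not values fitted in the paper, to show the resulting exponential growth in required examples.

```python
"""Sketch of a log-linear trend: performance grows with the logarithm of data.

A and B are hypothetical coefficients chosen for illustration only; they are
not values fitted by Udandarao et al.
"""
import math

A = 0.10  # hypothetical accuracy at a frequency of one example
B = 0.08  # hypothetical accuracy gained per 10x more examples


def accuracy(frequency: float) -> float:
    """Log-linear accuracy model, clipped to [0, 1]."""
    return min(1.0, max(0.0, A + B * math.log10(frequency)))


def examples_needed(target: float) -> float:
    """Invert the model: required data grows exponentially in the target accuracy."""
    return 10 ** ((target - A) / B)


if __name__ == "__main__":
    for target in (0.3, 0.4, 0.5, 0.6):
        print(f"accuracy {target:.1f} needs ~{examples_needed(target):,.0f} examples")
```

With these made-up coefficients, each additional 0.1 of accuracy requires about 18 times more examples (10^1.25).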

History


The term "artificial intelligence" was coined in 1955 by John McCarthy in his proposal for the Dartmouth workshop.[3][4] AI research continued through the rest of the 20th century in cycles of development and optimism, called "AI springs", and periods of stagnation and pessimism, known as "AI winters".[5] The resurgence of AI began in the late 1990s and early 2000s, enabled by advances in hardware, data availability, and algorithms.[6][7] It progressed into the deep learning revolution of the 2010s,[8][9] exemplified by DeepMind's AlphaGo defeating Go champion Lee Sedol in 2016.[10][11]

The Transformer, a deep learning architecture, was introduced in the landmark 2017 paper "Attention Is All You Need".[12][13][14] Transformers enabled the development of LLMs, most of which are built on them.[15][16][17][18] LLM-based chatbots, such as OpenAI's ChatGPT, rapidly gained a large user base and extensive press coverage after ChatGPT's release in late 2022.[19][20][21] These rapid developments in AI capabilities also highlighted challenges, including algorithmic bias, environmental impact, and other ethical concerns.[22][23][24]

In November 2024, reports emerged that AI models under development at several companies were showing diminishing returns, prompting discussion of the concept of the "AI Scaling Wall". Investors and executives associated with AI companies acknowledged these difficulties, and some said AI models could be scaled more effectively using new methods, such as test-time compute. However, these alternative approaches have their own difficulties and trade-offs and do not solve existing issues such as hallucinations.

Causes

Diminishing returns from model scaling

Diminishing returns from model scaling contribute significantly to the AI Scaling Wall by limiting the performance improvements achieved through increasing model size and computational power. Research on deep learning systems, such as large transformer models, has shown that while scaling up models reduces error rates and improves performance, the improvement follows a power law with a small exponent, so each incremental increase in resources yields a progressively smaller gain. This phenomenon is particularly evident in tasks such as natural language processing and image recognition, where additional parameters and training data eventually provide only minimal improvements in accuracy or generalization. These diminishing returns suggest that continued reliance on scaling alone is not a sustainable strategy for advancing artificial intelligence capabilities, highlighting the need for alternative approaches to improve efficiency and performance.
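The diminishing return can be made explicit from the power-law form reported by Kaplan et al.[1] Differentiating gives the marginal loss reduction per added parameter, which falls off faster than 1/N, and solving for the scale factor k that halves the loss shows how large an increase is needed. The numerical value assumes α_N ≈ 0.076 and extrapolates the fitted curve; it is an illustration, not a measured result.

```latex
\begin{aligned}
L(N) &= \left(\frac{N_c}{N}\right)^{\alpha_N} \\
\frac{dL}{dN} &= -\frac{\alpha_N}{N}\,L(N) \;\propto\; -N^{-(1+\alpha_N)} \\
L(kN) &= k^{-\alpha_N}\,L(N) = \tfrac{1}{2}\,L(N)
  \;\Longrightarrow\; k = 2^{1/\alpha_N} \approx 2^{13.2} \approx 9 \times 10^{3}
\end{aligned}
```

Under this fit, halving the loss by adding parameters alone would take roughly nine thousand times as many parameters.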

Computational constraints


Computational constraints are a critical factor contributing to the AI Scaling Wall, as the resources required to train and deploy large-scale artificial intelligence models grow steeply with their size. Training compute is roughly proportional to the number of model parameters multiplied by the number of training tokens, so increasing both tenfold raises compute roughly a hundredfold.[1] High-performance hardware, such as graphics processing units (GPUs) and tensor processing units (TPUs), faces limitations in terms of power efficiency, heat dissipation, and physical scalability, which can hinder further increases in computational capacity. Additionally, the energy costs of training massive models are becoming a significant barrier, with larger systems requiring extensive electricity and specialized cooling infrastructure. These constraints not only affect the feasibility of continuing to scale AI models but also raise concerns about the environmental and economic sustainability of current scaling strategies. Addressing these issues may require breakthroughs in hardware design, such as the adoption of neuromorphic computing or quantum computing, to circumvent the physical and practical limitations of existing technologies.
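Kaplan et al.[1] approximate the training compute of a Transformer as about 6 floating-point operations per parameter per training token (C ≈ 6ND). The sketch below converts that approximation into GPU-days and GPU energy. The model size, token count, sustained per-GPU throughput and power draw are all hypothetical inputs chosen for illustration, and the energy figure ignores cooling and other data-center overheads.

```python
"""Back-of-the-envelope training cost using the approximation C ~ 6 * N * D.

N (parameters), D (training tokens), per-GPU throughput and power draw below
are hypothetical inputs, not figures for any real system.
"""

SECONDS_PER_DAY = 86_400


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


def gpu_days(flops: float, sustained_flops_per_gpu: float) -> float:
    """GPU-days needed at a given sustained (not peak) throughput per GPU."""
    return flops / sustained_flops_per_gpu / SECONDS_PER_DAY


def energy_mwh(days_on_gpu: float, watts_per_gpu: float) -> float:
    """Energy drawn by the GPUs alone, in megawatt-hours (no cooling overhead)."""
    return days_on_gpu * 24 * watts_per_gpu / 1e6


if __name__ == "__main__":
    c = training_flops(70e9, 1.4e12)  # hypothetical: 70B parameters, 1.4T tokens
    days = gpu_days(c, 4e14)          # hypothetical: 400 TFLOP/s sustained per GPU
    print(f"compute: {c:.2e} FLOPs")
    print(f"GPU-days: {days:,.0f}")
    print(f"GPU energy: {energy_mwh(days, 700):,.0f} MWh")  # hypothetical 700 W per GPU
```

Because compute depends on the product N·D, increasing both parameters and tokens tenfold multiplies compute, and with it GPU-days and energy, by roughly a hundred.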

Exhaustion of training data


The exhaustion of high-quality training data is a significant factor contributing to the AI Scaling Wall, as many large-scale artificial intelligence models require vast datasets to achieve their performance. Most publicly available data suitable for training, such as text, images, and videos, has already been extensively utilized, leaving diminishing opportunities for novel or diverse datasets to drive further improvements. Moreover, reliance on redundant or lower-quality data can lead to issues such as overfitting or reduced generalization. Ethical and legal considerations, such as data privacy laws and copyright concerns, further limit the ability to collect or use additional data. This scarcity of training data poses a bottleneck for scaling AI systems, emphasizing the need for alternative methods like self-supervised learning or synthetic data generation to supplement available resources. In January 2025, Elon Musk, a co-founder of OpenAI and the founder of xAI, said that the supply of human-created data for AI training had been "exhausted" and that developers would have to turn to synthetic data to train AI models.[25][26]
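One way to see how data limits interact with compute is to combine the C ≈ 6ND approximation with the rule of thumb, associated with DeepMind's Chinchilla work, that a compute-optimal model is trained on roughly 20 tokens per parameter. Under those two assumptions the optimal token count grows with the square root of compute, so any fixed stock of text is eventually outgrown. The stock of 3 × 10^13 usable tokens below is a hypothetical figure, not an estimate from the cited sources.

```python
"""When does a compute-optimal training run outgrow a fixed stock of text?

Assumptions (all illustrative): training compute C ~ 6 * N * D, a rule-of-thumb
ratio of about 20 tokens per parameter, and a hypothetical 3e13-token stock.
"""
import math

TOKENS_PER_PARAM = 20.0  # rule-of-thumb compute-optimal ratio (assumption)
TEXT_STOCK = 3e13        # hypothetical number of usable high-quality tokens


def compute_optimal(c_flops: float) -> tuple[float, float]:
    """Return (parameters, tokens) that spend c_flops under the assumptions."""
    # C = 6 * N * D and D = 20 * N  =>  C = 120 * N**2
    n = math.sqrt(c_flops / (6.0 * TOKENS_PER_PARAM))
    return n, TOKENS_PER_PARAM * n


def budget_that_exhausts(stock: float) -> float:
    """Compute budget at which the optimal token count equals the stock."""
    n = stock / TOKENS_PER_PARAM
    return 6.0 * n * stock


if __name__ == "__main__":
    for c in (1e24, 1e25, 1e26, 1e27):
        n, d = compute_optimal(c)
        status = "exceeds stock" if d > TEXT_STOCK else "fits"
        print(f"C={c:.0e}: N={n:.2e}, D={d:.2e} tokens ({status})")
    print(f"stock exhausted near C={budget_that_exhausts(TEXT_STOCK):.2e} FLOPs")
```

With these assumptions the hypothetical stock is exhausted at about 2.7 × 10^26 FLOPs; beyond that, further compute-optimal scaling would need repeated, lower-quality, or synthetic data.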

Implications

The AI Scaling Wall, if it materializes, would carry significant implications for the development and application of AI. Should scaling reach practical or theoretical limits, the field may need to shift focus from increasing model size and computational power to more efficient and innovative techniques. This could accelerate research into alternative approaches, such as neuromorphic computing, hybrid AI models that integrate symbolic reasoning, and optimization algorithms designed to improve data efficiency. The emphasis on innovation may also reduce reliance on resource-intensive methods, potentially addressing concerns about the environmental impact of computing and making AI development more sustainable in the long term.

Economically, the AI Scaling Wall may exacerbate disparities between large technology companies and smaller organizations. As the costs of scaling increase without proportional performance gains, smaller players in the AI ecosystem may find it harder to compete. This could lead to further concentration of AI capabilities within a few well-funded entities, raising concerns about AI governance and equitable access to technology. On the other hand, a focus on more efficient and specialized systems could democratize AI development, enabling broader participation and innovation. Societally, the limitations imposed by the scaling wall highlight the need for responsible AI practices, prioritizing safety, alignment with human values, and addressing artificial intelligence ethics in a future where brute-force scaling is no longer a viable pathway for progress.

Proposed solutions

Alternative computing paradigms


Alternative computing paradigms such as quantum computing and neuromorphic computing have been proposed as potential solutions to the challenges of the AI Scaling Wall. Quantum computing uses quantum-mechanical phenomena to solve certain classes of problems faster than is believed possible on classical computers, and could potentially speed up optimization and linear-algebra operations, such as the matrix calculations central to machine learning workflows. Neuromorphic computing, meanwhile, seeks to emulate the structure and function of biological neural systems, enabling energy-efficient processing of tasks like perception and decision-making with reduced computational demands. These paradigms depart from the traditional von Neumann architecture and could overcome some limitations of current AI scaling by providing more efficient approaches to computation.
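Neuromorphic systems commonly use spiking neuron models, one of the simplest being the leaky integrate-and-fire (LIF) neuron. The sketch below simulates a single LIF neuron in software; the time constant, threshold and input currents are hypothetical, and no particular neuromorphic chip is modeled. The neuron emits output (a spike) only when its membrane potential crosses a threshold, which is the event-driven behavior neuromorphic hardware exploits to save energy.

```python
"""Leaky integrate-and-fire (LIF) neuron, a spiking model often associated
with neuromorphic hardware. Plain software simulation for illustration only;
parameters are hypothetical and no specific chip is modeled.
"""


def simulate_lif(inputs, tau=20.0, threshold=1.0, dt=1.0):
    """Return the time steps at which the neuron spikes.

    inputs: input current for each time step
    tau: membrane time constant (same units as dt)
    threshold: potential at which a spike is emitted and the potential resets
    """
    v = 0.0
    spikes = []
    for t, current in enumerate(inputs):
        # Leak toward zero, then integrate the incoming current.
        v += dt * (-v / tau + current)
        if v >= threshold:
            spikes.append(t)
            v = 0.0  # reset after a spike
    return spikes


if __name__ == "__main__":
    print("spikes with no input:", simulate_lif([0.0] * 50))
    print("spikes with steady input:", simulate_lif([0.08] * 50))
```

With a steady input of 0.08 per step, the potential approaches 1.6 and crosses the threshold every 20 steps; with no input the neuron stays silent and produces no events.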

Improved training algorithms

Improved training algorithms are a key avenue for mitigating the challenges posed by the AI Scaling Wall, enhancing the efficiency and effectiveness of machine learning systems without requiring massive increases in computational resources. Techniques such as federated learning, self-supervised learning, and sparse model architectures aim to optimize the use of data and computation during training. These innovations can reduce reliance on large-scale training data and high-power hardware while improving model generalization and performance. For example, advances in optimization methods, such as adaptive gradient algorithms and dynamic learning rate adjustments, allow for faster convergence and better use of available resources. By making AI systems more efficient and less dependent on brute-force scaling, these training improvements could offer a more sustainable pathway for advancing artificial intelligence capabilities.
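The adaptive-gradient and dynamic learning-rate ideas can be sketched with Adam, one widely used adaptive-gradient optimizer, combined with a linear-warmup and cosine-decay learning-rate schedule, a common dynamic schedule. This is a minimal pure-Python sketch on a toy, badly scaled quadratic; the objective and hyperparameters are hypothetical, and the example shows how per-coordinate step sizes work rather than any efficiency gain at scale.

```python
"""Adam with a warmup-plus-cosine learning-rate schedule on a toy quadratic.

Minimal pure-Python sketch; the objective and hyperparameters are hypothetical.
"""
import math


def lr_schedule(step, total, peak=0.1, warmup=10):
    """Linear warmup to `peak`, then cosine decay toward zero."""
    if step < warmup:
        return peak * (step + 1) / warmup
    progress = (step - warmup) / max(1, total - warmup)
    return 0.5 * peak * (1.0 + math.cos(math.pi * progress))


def adam_minimize(grad_fn, x0, steps=200, beta1=0.9, beta2=0.999, eps=1e-8):
    """Minimize a function from its gradient using Adam's per-coordinate steps."""
    x = [float(c) for c in x0]
    m = [0.0] * len(x)  # running mean of gradients
    v = [0.0] * len(x)  # running mean of squared gradients
    for t in range(1, steps + 1):
        g = grad_fn(x)
        lr = lr_schedule(t - 1, steps)
        for i, gi in enumerate(g):
            m[i] = beta1 * m[i] + (1 - beta1) * gi
            v[i] = beta2 * v[i] + (1 - beta2) * gi * gi
            m_hat = m[i] / (1 - beta1 ** t)  # bias correction
            v_hat = v[i] / (1 - beta2 ** t)
            x[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x


def grad(x):
    """Gradient of f(x) = 100 * x[0]**2 + x[1]**2, minimized at the origin."""
    return [200.0 * x[0], 2.0 * x[1]]


if __name__ == "__main__":
    print(adam_minimize(grad, [1.0, 1.0]))
```

Because Adam divides each coordinate's update by a running estimate of its gradient magnitude, the steep x[0] direction and the shallow x[1] direction follow essentially the same trajectory here, which plain gradient descent with a single step size would not do.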

Test-time compute

Test-time compute is a strategy for mitigating the AI Scaling Wall by shifting computational effort from the training phase to the inference phase, improving model performance without requiring ever-larger models or datasets. Whereas traditional machine learning systems typically spend a fixed amount of computation on each input at inference, test-time compute methods dynamically allocate additional computation to process inputs more effectively. Techniques such as adaptive computation time let a model vary how much processing it applies to each input, while ensemble methods combine multiple outputs to improve accuracy. By spending more compute during inference, these approaches aim to maintain high performance while reducing reliance on expensive scaling during training.
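One simple way to spend extra compute at inference is to sample several answers and take a majority vote, an ensemble-style approach related to the "self-consistency" technique used with LLMs. The sketch computes the resulting accuracy exactly under strong simplifying assumptions: each sample is independently correct with probability p, there are only two possible answers, and the number of samples k is odd. Real samples from one model are correlated, so actual gains are usually smaller.

```python
"""Accuracy of majority voting over k independent samples (simplified model).

Assumptions: each sample is independently correct with probability p, there
are only two possible answers, and k is odd so there are no ties.
"""
from math import comb


def majority_vote_accuracy(p: float, k: int) -> float:
    """Probability that more than half of k independent samples are correct."""
    if k % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    return sum(comb(k, i) * p**i * (1 - p) ** (k - i) for i in range(k // 2 + 1, k + 1))


if __name__ == "__main__":
    for k in (1, 5, 15, 45):
        acc = majority_vote_accuracy(0.6, k)
        print(f"{k:>2} samples (~{k}x inference cost): accuracy {acc:.3f}")
```

Inference cost grows roughly linearly with k while accuracy gains shrink, and when p is below 0.5 the same voting makes accuracy worse, so test-time compute is not a free substitute for a stronger model.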

Perspectives and interpretations


Some figures at AI companies, such as Sam Altman, the CEO of OpenAI,[27] Jensen Huang, the CEO of Nvidia,[28][29] and Eric Schmidt, the former CEO of Google,[30] have denied that there is a bottleneck limiting improvements to the performance of AI models through traditional resource scaling. However, others, such as Ilya Sutskever, a co-founder of OpenAI,[31] Robert Nishihara, a co-founder of Anyscale,[32] and Anjney Midha, a partner at Andreessen Horowitz,[32] have said that there is a limit to the progress that can be made with this approach. Furthermore, several prominent industry figures, such as Altman,[33] Satya Nadella, the CEO of Microsoft,[32] and Sundar Pichai, the CEO of Google,[34] have said that new methods are required to continue improving the performance of AI models.

External links

- Is the Tech Industry Already on the Cusp of an A.I. Slowdown?

- AI is hitting a wall just as the hype around it reaches the stratosphere

- Current AI scaling laws are showing diminishing returns, forcing AI labs to change course

- AI’s $100bn question: The scaling ceiling

References

- ^ Kaplan, Jared; McCandlish, Sam; Henighan, Tom; Brown, Tom B.; Chess, Benjamin; Child, Rewon; Gray, Scott; Radford, Alec; Wu, Jeffrey; Amodei, Dario (23 January 2020). "Scaling Laws for Neural Language Models". arXiv Machine Learning. arXiv:2001.08361.

- ^ Udandarao, Vishaal; Prabhu, Ameya; Ghosh, Adhiraj; Sharma, Yash; Torr, Philip H.S.; Bibi, Adel; Albanie, Samuel; Bethge, Matthias (4 April 2024). "No "Zero-Shot" Without Exponential Data: Pretraining Concept Frequency Determines Multimodal Model Performance". arXiv Machine Learning. arXiv:2404.04125.

- ^ Cordeschi, Roberto (25 April 2007). "AI Turns Fifty: Revisiting Its Origins". Applied Artificial Intelligence. 21 (4–5): 259–279. doi:10.1080/08839510701252304.

As is well known, the expression artificial intelligence was introduced by John McCarthy in the 1955 document proposing the Dartmouth Conference.

- ^ van Assen, Marly; Muscogiuri, Emanuele; Tessarin, Giovanni; De Cecco, Carlo N. (2022). "Artificial Intelligence: A Century-Old Story". In De Cecco, Carlo N.; van Assen, Marly; Leiner, Tim (eds.). Artificial Intelligence in Cardiothoracic Imaging. Cham: Springer International Publishing AG. p. 5. ISBN 978-3-030-92086-9.

- ^ Mitchell, Melanie (26 June 2021). "Why AI is harder than we think". In Chicano, Francisco (ed.). Proceedings of the Genetic and Evolutionary Computation Conference. New York, NY, United States: Association for Computing Machinery. p. 3. ISBN 978-1-4503-8350-9.

Since its beginning in the 1950s, the field of artificial intelligence has cycled several times between periods of optimistic predictions and massive investment ("AI Spring") and periods of disappointment, loss of confidence, and reduced funding ("AI Winter").

- ^ Hardcastle, Kimberley (23 August 2023). "We're talking about AI a lot right now – and it's not a moment too soon". The Conversation. Archived from the original on 23 January 2025. Retrieved 23 January 2025.

Limited resources and computational power available at the time hindered growth and adoption. But breakthroughs in machine learning, neural networks, and data availability fuelled a resurgence of AI around the early 2000s.

- ^ Carletti, Vincenzo; Greco, Antonio; Percannella, Gennaro; Vento, Mario (1 September 2020). "Age from Faces in the Deep Learning Revolution". IEEE Transactions on Pattern Analysis and Machine Intelligence. 42 (9). IEEE Computer Society: 2113–2132. doi:10.1109/TPAMI.2019.2910522. PMID 30990174.

- ^ Sejnowski, Terrence J. (2018). The Deep Learning Revolution. Cambridge, Massachusetts; London, England: The MIT Press. ISBN 978-0262038034.

- ^ Dean, Jeffrey (1 May 2022). "A Golden Decade of Deep Learning: Computing Systems & Applications". Daedalus. 151 (2): 58–74. doi:10.1162/daed_a_01900.

- ^ Jiang, Yuchen; Li, Xiang; Luo, Hao; Yin, Shen; Kaynak, Okyay (7 March 2022). "Quo vadis artificial intelligence?". Discover Artificial Intelligence. 2 (1). doi:10.1007/s44163-022-00022-8.

- ^ Purtill, James (24 October 2023). "'The most shocking thing I've ever seen': How one move in an ancient board game changed our view of AI". ABC News. Archived from the original on 23 January 2025. Retrieved 23 January 2025.

- ^ Love, Julia (11 July 2023). "AI Researcher Who Helped Write Landmark Paper Is Leaving Google". Bloomberg. Archived from the original on 11 July 2023. Retrieved 24 January 2025.

- ^ Toews, Rob (3 September 2023). "Transformers Revolutionized AI. What Will Replace Them?". Forbes. Archived from the original on 28 December 2023.

- ^ Murgia, Madhumita (23 July 2023). "Transformers: the Google scientists who pioneered an AI revolution". Financial Times. Archived from the original on 15 May 2024. Retrieved 24 January 2025.

- ^ Minaee, Shervin; Mikolov, Tomas; Nikzad, Narjes; Chenaghlu, Meysam; Socher, Richard; Amatriain, Xavier; Gao, Jianfeng (9 February 2024). "Large Language Models: A Survey". arXiv Computation and Language: 12–13. arXiv:2402.06196.

- ^ Stevenson, Mark (10 December 2024). "Large language models: how the AI behind the likes of ChatGPT actually works". The Conversation. Archived from the original on 11 December 2024. Retrieved 24 January 2025.

- ^ Levy, Steven (20 March 2024). "8 Google Employees Invented Modern AI. Here's the Inside Story". Wired. Archived from the original on 22 November 2024. Retrieved 24 January 2025.

- ^ "What are Large Language Models? | NVIDIA Glossary". Nvidia. Archived from the original on 24 January 2025. Retrieved 24 January 2025.

- ^ Gruenhagen, Jan Henrik; Sinclair, Peter M.; Carroll, Julie-Anne; Baker, Philip R.A.; Wilson, Ann; Demant, Daniel (December 2024). "The rapid rise of generative AI and its implications for academic integrity: Students' perceptions and use of chatbots for assistance with assessments". Computers and Education: Artificial Intelligence. 7: 100273. doi:10.1016/j.caeai.2024.100273.

- ^ Gerken, Tom (23 January 2025). "ChatGPT back online after outage which hit thousands worldwide". BBC News. Archived from the original on 24 January 2025. Retrieved 24 January 2025.

- ^ Dunn, Will (6 August 2024). "When the AI bubble bursts". New Statesman. Archived from the original on 6 August 2024.

- ^ Ungoed-Thomas, Jon; Abdulahi, Yusra (25 August 2024). "Warnings AI tools used by government on UK public are 'racist and biased'". The Observer. Archived from the original on 25 January 2025. Retrieved 25 January 2025.

- ^ Griffin, Andrew (8 November 2024). "AI is terrible for the environment, study finds". The Independent. Archived from the original on 25 January 2025. Retrieved 25 January 2025.

- ^ "Kate Bush joins campaign against AI using artists' work without permission". The Guardian. 12 December 2024. Archived from the original on 12 December 2024. Retrieved 25 January 2025.

- ^ Milmo, Dan (9 January 2025). "Elon Musk says all human data for AI training 'exhausted'". The Guardian. Archived from the original on 9 January 2025. Retrieved 21 January 2025.

- ^ Wiggers, Kyle (9 January 2025). "Elon Musk agrees that we've exhausted AI training data". TechCrunch. Archived from the original on 17 January 2025. Retrieved 21 January 2025.

- ^ Scammell, Robert (14 November 2024). "Sam Altman says 'there is no wall' in an apparent response to fears of an AI slowdown". Business Insider. Archived from the original on 14 November 2024. Retrieved 21 January 2025.

- ^ "Nvidia's boss dismisses fears that AI has hit a wall". The Economist. 21 November 2024. Archived from the original on 23 November 2024.

- ^ Zeff, Maxwell (7 January 2025). "Exclusive: Nvidia CEO says his AI chips are improving faster than Moore's Law". TechCrunch. Archived from the original on 8 January 2025. Retrieved 21 January 2025.

- ^ Nolan, Beatrice (15 November 2024). "Eric Schmidt says there's 'no evidence' AI scaling laws are stopping — but they will eventually". Business Insider. Archived from the original on 15 November 2024. Retrieved 21 January 2025.

- ^ Hu, Krystal; Tong, Anna (15 November 2024). "OpenAI and others seek new path to smarter AI as current methods hit limitations". Reuters. Archived from the original on 15 November 2024.

- ^ a b c Zeff, Maxwell (20 November 2024). "Current AI scaling laws are showing diminishing returns, forcing AI labs to change course". TechCrunch. Archived from the original on 10 December 2024.

- ^ Knight, Will (17 April 2023). "OpenAI's CEO Says the Age of Giant AI Models Is Already Over". Wired. Archived from the original on 25 April 2023.

- ^ Langley, Hugh (5 December 2024). "Google CEO Sundar Pichai says AI progress will get harder in 2025 because 'the low-hanging fruit is gone'". Business Insider. Archived from the original on 6 December 2024. Retrieved 21 January 2025.