Wikipedia:WikiProject Conservatism

This is a WikiProject, an area for focused collaboration among Wikipedians. New participants are welcome; please feel free to participate!

Become a member!

Welcome to WikiProject Conservatism! Whether you're a newcomer or a regular, you'll receive encouragement and recognition for your achievements with conservatism-related articles. This project does not extol any point of view, political or otherwise, other than that of a neutral documentarian. Partly for this reason, the project's scope has long been conservatism broadly construed, taking in a healthy periphery of related articles (e.g., more academic ones) for context.

- Do you have a question?

Major alerts

A broad collection of discussions that could lead to significant changes to related articles

Did you know

Articles for deletion

Categories for discussion

Redirects for discussion

Files for discussion

Good article nominees

Featured article reviews

Good article reassessments

Requests for comments

Peer reviews

Requested moves

Articles to be merged

Articles to be split

Updated daily by AAlertBot.

Watchlists

Hot (Excerpt)

These are the articles that have been edited the most within the last seven days. Last updated 20 June 2025 by HotArticlesBot.

Pop (Excerpt)

This is a list of pages in the scope of Wikipedia:WikiProject Conservatism along with their pageviews. To report bugs, please write on the Community tech bot talk page on Meta. List period: 2025-05-01 to 2025-05-31. Total views: 72,291,566. Updated: 12:04, 6 June 2025 (UTC).

Alternative watchlist prototypes (Excerpts)

Publications watchlist prototype beneath this line:

Watchlist of journalists, bloggers, commentators etc., beneath this line:

Organizations watchlist beneath this line:

Prototype political parties watchlist beneath this line:

Prototype politicians watchlist beneath this line:

Prototype MISC (drafts, templates etc.) watchlist beneath this line:

New articles

A list of semi-related articles that were recently created

This list was generated from a set of search rules. Questions and feedback are always welcome! The search is run daily and covers roughly the most recent 14 days of results. Note: some articles may not be relevant to this project. Last updated: 2025-06-19 20:28 (UTC). The list display can now be customized by each user; see List display personalization for details.

In The Signpost

One of various articles to this effect

DISCUSSION REPORT

WikiProject Conservatism Comes Under Fire

By Lionelt

WikiProject Conservatism was a topic of discussion at the Administrators' Noticeboard/Incidents (AN/I). Objective3000 started a thread in which he expressed concern about the number of RFC notices posted on the project's Discussion page, suggesting that such notices "could result in swaying consensus by selective notification." Several editors took part in the relatively brief six-hour discussion. The assertion that the project is a "club for conservatives" was countered by editors listing examples of users who "profess no political persuasion." It was also noted that notifying WikiProjects of ongoing discussions is explicitly permitted by the WP:Canvassing guideline. At one point the discussion segued into feedback about The Right Stuff. Member SPECIFICO wrote: "One thing I enjoy about the Conservatism Project is the handy newsletter that members receive on our talk pages." Atsme praised the newsletter as "first-class entertainment...BIGLY...first-class...nothing even comes close...it's amazing." Some good-natured sarcasm was offered, with Objective3000 observing, "Well, they got the color right" and MrX following up with, "Wow. Yellow is the new red." Admin Oshwah closed the thread with the result "definitely not an issue for ANI" and directed editors to the project Discussion page for any further discussion. Editor's note: the design and color of The Right Stuff were originally chosen to mimic an old paper newspaper. Add the Project Discussion page to your watchlist for the "latest RFCs" at WikiProject Conservatism.

ARTICLES REPORT

Margaret Thatcher Makes History Again

By Lionelt

Margaret Thatcher is the first article promoted at the new WikiProject Conservatism A-Class review. Congratulations to Neveselbert. A-Class is a quality rating that ranks higher than GA (Good article), but its criteria are not as rigorous as those for FA (Featured article). WikiProject Conservatism is one of only two WikiProjects offering A-Class review, the other being WikiProject Military History. Nominate your article here.

RECENT RESEARCH

Research About AN/I

By Lionelt. Reprinted in part from the April 26, 2018 issue of The Signpost; written by Zarasophos.

Out of over one hundred surveyed editors, only twenty-seven (27%) are happy with the way reports of conflicts between editors are handled on the Administrators' Incident Noticeboard (AN/I), according to a recent survey. The survey also found that dissatisfaction stems from varied causes, including "defensive cliques" and biased administrators, as well as fear of a "boomerang effect" due to the lack of a rule limiting the scope of AN/I reports. The survey also included an analysis of available quantitative data about AN/I. Some notable takeaways:

In the wake of Zarasophos' article, editors discussed the AN/I survey at The Signpost and also at AN/I. Ironically, a portion of the AN/I thread was hatted due to "off-topic sniping." To follow up on the problems identified by the research project, the Wikimedia Foundation Anti-Harassment Tools team and Support and Safety team initiated a discussion. You can express your thoughts and ideas here.

WikiProject Conservatism

Is Wikipedia Politically Biased? Perhaps

A monthly overview of recent academic research about Wikipedia and other Wikimedia projects, also published as the Wikimedia Research Newsletter.

Report by conservative think-tank presents ample quantitative evidence for "mild to moderate" "left-leaning bias" on Wikipedia

A paper titled "Is Wikipedia Politically Biased?"[1] answers that question with a qualified yes:

The author (David Rozado, an associate professor at Otago Polytechnic) has published ample peer-reviewed research on related matters before, some of which was featured, e.g., in The Guardian and The New York Times. In contrast, the present report is not peer-reviewed and, unlike most of the research we usually cover here, was not posted in an academic venue. Rather, it was published (and possibly commissioned) by the Manhattan Institute, a conservative US think tank, which presumably found its results not too objectionable. (Also, some broken URLs in the PDF suggest that Manhattan Institute staff members were involved in the writing of the paper.) Still, the report indicates an effort to adhere to various standards of academic research publications, including fairly detailed descriptions of the methods and data used. It is worth taking more seriously than, for example, another recent report that alleged a different form of political bias on Wikipedia, which had likewise been commissioned by an advocacy organization and authored by an academic researcher, but was met with severe criticism by the Wikimedia Foundation (which called it out for "unsubstantiated claims of bias") and by volunteer editors (see prior Signpost coverage). That isn't to say that there can't be some questions about the validity of Rozado's results, and in particular about how to interpret them. But let's first go through the paper's methods and data sources in more detail.

Determining the sentiment and emotion in Wikipedia's coverage

The report's main results regarding Wikipedia are obtained as follows:

The sentiment classification rates the mention of a term as negative, neutral or positive. (For the purpose of forming averages, this is converted into a quantitative scale from -1 to +1.) See the end of this review for some concrete examples from the paper's published dataset. The emotion classification uses

The annotation method used appears to be an effort to avoid the shortcomings of popular existing sentiment analysis techniques, which often rate only the overall emotional stance of a given text without determining whether it actually applies to a specific entity mentioned in it (or in some cases even fail to handle negations, e.g. by classifying "I am not happy" as a positive emotion). Rozado justifies the

Selecting topics to examine for bias

As for selecting the terms whose Wikipedia coverage would be annotated with this classifier, Rozado does a lot of due diligence to avoid cherry-picking:

Overall, the study arrives at 12 different groups of such terms:
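To make the averaging step described above concrete, here is a minimal Python sketch. It assumes the three sentiment labels map to -1, 0 and +1, averages the scores for a group of mentions, and compares two groups with Welch's t statistic as one common way of checking whether two averages are statistically distinguishable. The label names, the sample data and the choice of Welch's test are illustrative assumptions, not Rozado's published code, data or necessarily his statistical test.

```python
from math import sqrt
from statistics import mean, variance

# Assumed mapping from the three sentiment labels onto the -1..+1 scale
SCORE = {"negative": -1, "neutral": 0, "positive": 1}

def average_sentiment(labels):
    """Mean score over a list of sentiment labels, e.g. all mentions of a group of terms."""
    return mean(SCORE[label] for label in labels)

def welch_t(a, b):
    """Welch's t statistic for the difference between the means of two samples."""
    return (mean(a) - mean(b)) / sqrt(variance(a) / len(a) + variance(b) / len(b))

# Hypothetical per-mention labels for two groups of terms (made up, not from the paper's dataset)
left = ["positive", "neutral", "positive", "negative", "neutral"]
right = ["negative", "neutral", "negative", "positive", "neutral"]

left_scores = [SCORE[label] for label in left]
right_scores = [SCORE[label] for label in right]

print(average_sentiment(left), average_sentiment(right))
print(welch_t(left_scores, right_scores))
```

With these made-up labels the two groups average 0.2 and -0.2, and the t statistic comes out at about 0.76, far too small with only five mentions per group to call the difference statistically significant.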

What is "left-leaning" and "right-leaning"?As discussed, Rozado's methods for generating these lists of people and organizations seem reasonably transparent and objective. It gets a bit murkier when it comes to splitting them into "left-leaning" and "right-leaning", where the chosen methods remain unclear and/or questionable in some cases. Of course there is a natural choice available for US Congress members, where the confines of the US two-party system mean that the left-right spectrum can be easily mapped easily to Democrats vs. Republicans (disregarding a small number of independents or libertarians). In other cases, Rozado was able to use external data about political leanings, e.g. "a list of politically aligned U.S.-based journalists" from Politico. There may be questions about construct validity here (e.g. it classifies Glenn Greenwald or Andrew Sullivan as "journalists with the left"), but at least this data is transparent and determined by a source not invested in the present paper's findings. But for example the

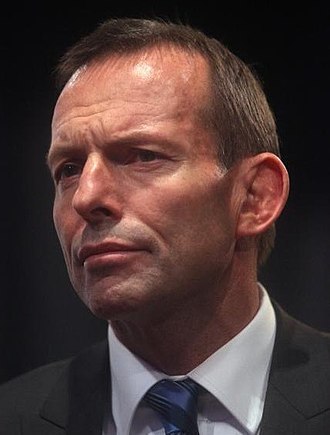

list of UK MPs used contains politicians from 14 different parties (plus independents). Even if one were to confine the left vs. right labels to the two largest groups in the UK House of Commons (Tories vs. Labour and Co-operative Party, which appears to have been the author's choice judging from Figure 5), the presence of a substantial number of parliamentarians from other parties to the left or right of those would make the validity of this binary score more questionable than in the US case. Rozado appears to acknowledge a related potential issue in a side remark when trying to offer an explanation for one of the paper's negative results (no bias) in this case: This kind of question become even more complicated for the "Leaders of Western Countries" list (where Tony Abbott scored the most negative average sentiment, and José Luis Rodríguez Zapatero and Scott Morrison appear to be in a tie for the most positive average sentiment). Most of these countries do not have a two-party system either. Sure, their leaders usually (like in the UK case) hail from one of the two largest parties, one of which is more to the left and the another more to the right. But it certainly seems to matter for the purpose of Rozado's research question whether that major party is more moderate (center-left or center-right, with other parties between it and the far left or far right) or more radical (i.e. extending all the way to the far-left or far-right spectrum of elected politicians). What's more, the analysis for this last group compares political orientations across multiple countries. Which brings us to a problem that Wikipedia's Jimmy Wales had already pointed to back in 2006 in response a conservative US blogger who had argued that there was "a liberal bias in many hot-button topic entries" on English Wikipedia:

We already discussed this issue in our earlier reviews of a notable series of papers by Greenstein and Zhu (see e.g. "Language analysis finds Wikipedia's political bias moving from left to right", 2012), which had relied on a US-centric method of defining left-leaning and right-leaning (namely, a corpus derived from the US Congressional Record). Those studies form a large part of what Rozado cites as "[a] substantial body of literature [that]—albeit with some exceptions—has highlighted a perceived bias in Wikipedia content in favor of left-leaning perspectives." (The cited exception is a paper[2] that had found "a small to medium size coverage bias against [members of parliament] from the center-left parties in Germany and in France", and identified patterns of "partisan contributions" as a plausible cause.) Similarly, 8 out of the 10 groups of people and organizations analyzed in Rozado's study are from the US (the two exceptions being the aforementioned lists of UK MPs and leaders of Western countries). In other words, one potential reason for the disparities found by Rozado might simply be that he is measuring an international encyclopedia with a (largely) national yardstick of fairness. This shouldn't lead us to dismiss his findings too easily. But it is a bit disappointing that this possibility is nowhere addressed in the paper, even though Rozado diligently discusses some other potential limitations of the results. E.g. he notes that

Another limitation is that a simple binary left vs. right classification might hide factors that could shed further light on the bias findings. Even in the US, with its two-party system, political scientists and analysts have long since moved to less simplistic measures of political orientation. A widely used one is the NOMINATE method, which assigns members of the US Congress continuous scores based on their detailed voting records, one of which corresponds to the left-right spectrum as traditionally understood.
One finding based on that measure that seems relevant in the context of the present study is the (widely discussed but itself controversial) asymmetric polarization thesis, which argues that "Polarization among U.S. legislators is asymmetric, as it has primarily been driven by a substantial rightward shift among congressional Republicans since the 1970s, alongside a much smaller leftward shift among congressional Democrats" (as summarized in the linked Wikipedia article). If, for example, higher polarization were associated with negative sentiment, this could be a potential explanation for Rozado's results. Again, this has to remain speculative, but it seems to be another notable omission in the paper's discussion of limitations.

What does "bias" mean here?

A fundamental problem of this study, which, to be fair, it shares with much fairness and bias research (in particular on Wikipedia's gender gap, where many studies similarly focus on binary comparisons that are likely to appeal successfully to an intuitive sense of fairness), consists of justifying its answers to the following two basic questions:

Regarding 1 (defining a baseline of unbiasedness), Rozado simply assumes that this should imply statistically indistinguishable levels of average sentiment between left- and right-leaning terms. However, as cautioned by one leading scholar on quantitative measures of bias, "the 'one true fairness definition' is a wild goose chase" – there are often multiple different definitions available that can all be justified on ethical grounds, and they often contradict each other. Above, we already alluded to two potentially diverging notions of political unbiasedness for Wikipedia (using an international instead of a US metric for left vs. right leaning, and taking into account polarization levels for politicians). Yet another question, highly relevant for Wikipedians interested in addressing the potential problems reported in this paper, is how well its definition lines up with Wikipedia's own definition of neutrality. Rozado clearly thinks that it does:

WP:NPOV indeed calls for avoiding subjective language and for not expressing judgments and opinions in Wikipedia's own voice, and Rozado's findings about the presence of non-neutral sentiments and emotions in Wikipedia articles are of some concern in that regard. However, that is not the core definition of NPOV. Rather, the core definition refers to "representing fairly, proportionately, and, as far as possible, without editorial bias, all the significant views that have been published by reliable sources on a topic." What if the coverage of the terms examined by Rozado (politicians, etc.) in those reliable sources were, in aggregate, also biased in the sense of Rozado's definition? US progressives might be inclined to invoke comedian Stephen Colbert's snarky dictum "reality has a liberal bias". Of course, conservatives might object that Wikipedia's definition of reliable sources (having

All this brings us to question 2 above (causality). While Rozado uses carefully couched language in this regard ("suggests" etc., e.g.

Commendably, the paper is accompanied by a published dataset, consisting of the analyzed Wikipedia text snippets together with the mentioned term and the sentiment or emotion identified by the automated annotation. For illustration, below are the sentiment ratings for mentions of the Yankee Institute for Public Policy (the last term in the dataset, as a non-cherry-picked example), with the term bolded:

Briefly

Other recent publications

Other recent publications that could not be covered in time for this issue include the items listed below. Contributions, whether reviewing or summarizing newly published research, are always welcome.

How English Wikipedia mediates East Asian historical disputes with Habermasian communicative rationality

From the abstract:[3]

From the paper:

(See also excerpts)

Facebook/Meta's "No Language Left Behind" translation model used on Wikipedia

From the abstract of this publication by a large group of researchers (most of them affiliated with Meta AI):[4]

"Which Nigerian-Pidgin does Generative AI speak?" – only the BBC's, not Wikipedia's

From the abstract:[5]

The paper's findings are consistent with an analysis by the Wikimedia Foundation's research department that compared the number of Wikipedia articles to the number of speakers for the 20 most-spoken languages, in which Naija stood out as one of the most underrepresented.

"[A] surprising tension between Wikipedia's principle of safeguarding against self-promotion and the scholarly norm of 'due credit'"

From the abstract:[6]

See also coverage of a different paper that likewise analyzed Wikipedia's coverage of CRISPR: "Wikipedia as a tool for contemporary history of science: A case study on CRISPR"

"How article category in Wikipedia determines the heterogeneity of its editors"

From the abstract:[7]

"What do they make us see:" The "cultural bias" of GLAMs is worse on Wikidata

From the abstract:[8]

References

ToDo List

Miscellaneous tasks

A list of articles needing cleanup associated with this project is available. See also the tool's wiki page and the index of WikiProjects.

Categories to look through

Translation ToDo

Requested articles (in general)

Merging ToDo
